Experimentation & A/B Testing

Remove the guesswork. Start testing what actually works.

We help you test ideas before they become expensive mistakes.

A/B Testing

We design and manage your A/B tests from start to finish.

Multivariate Testing

We handle more advanced test setups when needed.

Test Design

We design clear, focused experiments, each built to answer a specific question.

Tracking Setup

We handle event tracking and ensure test data is accurate.

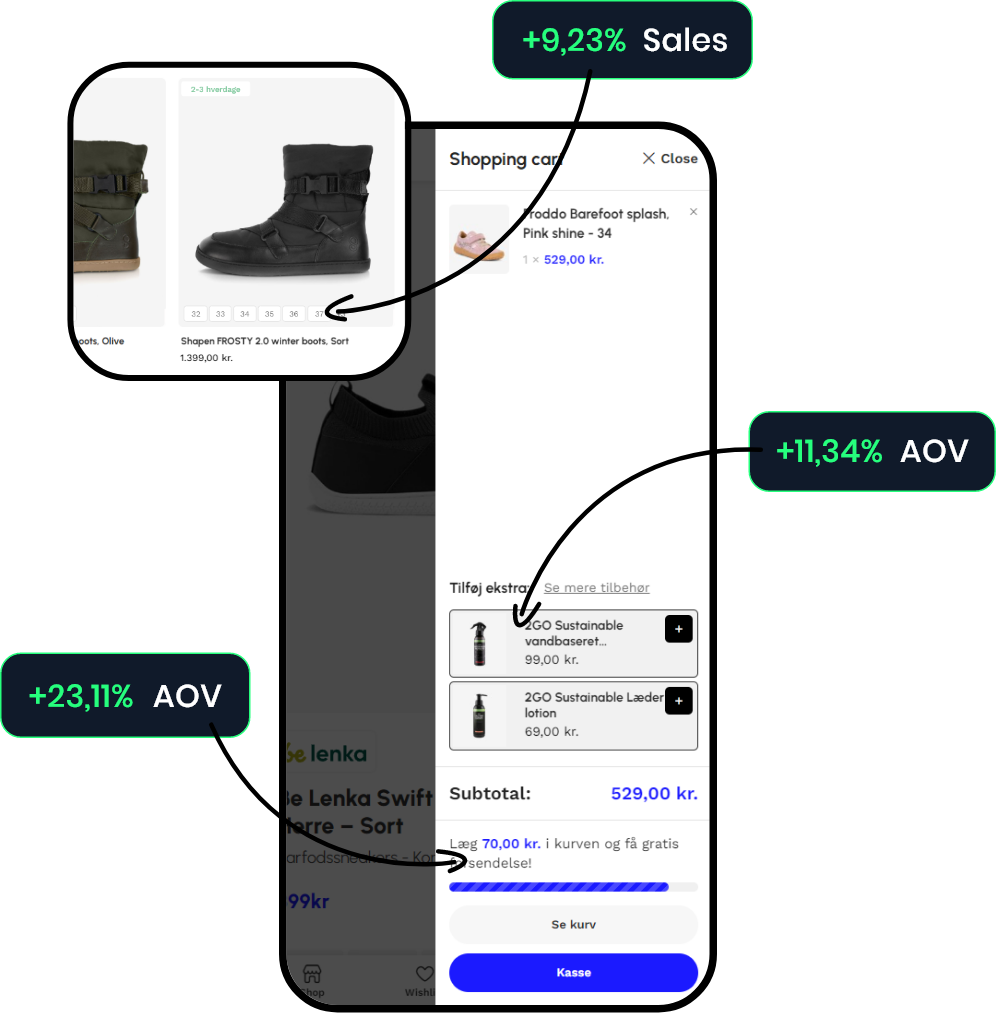

Unlock the hidden revenue on your site

By testing smarter changes, you can unlock growth across your entire funnel. Think higher average order value, better cart completion, stronger messaging, and fewer drop-offs.

- Improve every step.

- Fix leaks.

- Increase sales.

Our Process

This is how we work

Behavioral & Quantitative Research

We analyze user behavior, heatmaps, scroll depth, session replays, and funnel data to identify conversion roadblocks.

Audit & Hypothesis Development

We audit your site and user flows to uncover friction points. Then we create testable hypotheses, prioritized using ICE or PXL frameworks.
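As a rough illustration of ICE prioritization, the sketch below scores each hypothesis on Impact, Confidence, and Ease (1 to 10 each) and ranks by the average. The averaging rule, the `Hypothesis` class, and the example backlog are assumptions made for illustration. Some teams multiply the three scores instead, and this is not necessarily the exact scoring we apply on a given project.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """A testable idea with hypothetical 1-10 ICE ratings."""
    name: str
    impact: int      # expected effect on the target metric
    confidence: int  # how strongly research supports the idea
    ease: int        # how cheap it is to build and launch

    @property
    def ice_score(self) -> float:
        # Assumption: simple average of the three ratings.
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(hypotheses):
    """Return hypotheses sorted from highest to lowest ICE score."""
    return sorted(hypotheses, key=lambda h: h.ice_score, reverse=True)


# Hypothetical backlog, for illustration only.
backlog = [
    Hypothesis("Clearer size guide", impact=7, confidence=8, ease=6),
    Hypothesis("New checkout flow", impact=9, confidence=5, ease=2),
    Hypothesis("Shorter headline", impact=3, confidence=4, ease=9),
]

for h in prioritize(backlog):
    print(f"{h.name}: {h.ice_score:.1f}")
```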

Conversion Design & UX Prototyping

We design high-converting page or UI variants based on our hypotheses — using best practices in layout, copy, and flow psychology.

Test Execution & Monitoring

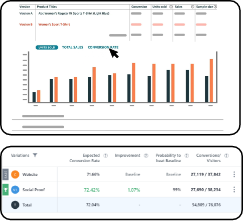

We launch the experiment and monitor it in real time, ensuring statistical validity and consistent test health.

Analysis, Reporting & Iteration

Once the test concludes, we analyze the results, share insights, and apply learnings to the next iteration. Continuous improvement is built in.

Explore our client work

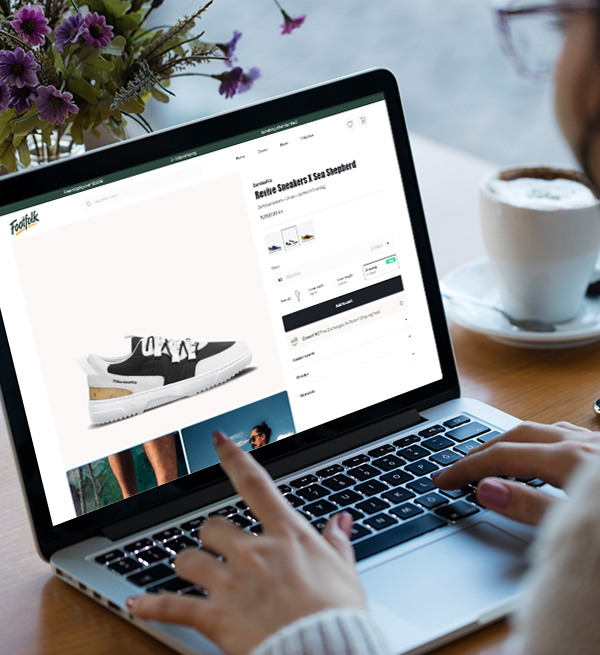

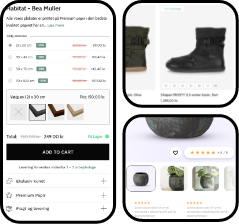

Clearer size guides across all products led to a 13.5% lift in purchase rate and fewer support questions.

Adding size previews in the product grid boosted conversions by 54% and reduced time-to-purchase, all without touching ad spend.

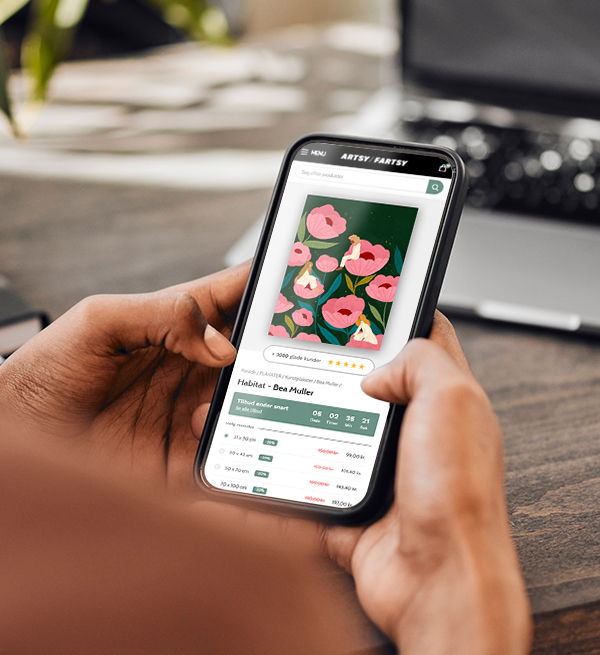

UX tweaks on mobile reduced bounce rate by 18.7% and cut cart abandonment by 9.2% for Artsy Fartsy.

Frequently asked questions

What is A/B testing and how does it work?

A/B testing is a method of comparing two versions of a webpage or element to see which one performs better. Version A is the original (the “control”), and version B is the variation with one specific change — like a new headline, button color, or layout. Visitors are randomly split between the two versions, and their behavior is tracked (e.g., clicks, signups, purchases). The goal is to find out which version leads to more conversions, based on real user data — not guesswork.
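To make the visitor split concrete, here is a minimal sketch of one common way to assign visitors: hashing a visitor ID so the split is roughly even and each visitor sees the same version on every visit. The function name `assign_variant`, the ID format, and the experiment name are hypothetical. Testing tools handle this step internally and may do it differently.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to a variant with a roughly even split."""
    # Including the experiment name means the same visitor can land in
    # different groups across different experiments.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Hypothetical visitor and experiment identifiers.
print(assign_variant("visitor-123", "headline-test"))
```

Because the assignment depends only on the ID and the experiment name, a returning visitor keeps seeing the same version without any extra storage.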

Will testing slow down my website?

When implemented correctly, A/B testing has little to no impact on site speed. We use lightweight, asynchronous testing tools that don’t block or delay page loading. Additionally, our test scripts are optimized for performance and reviewed to avoid conflicts with your existing setup. If speed is a concern, we’ll work with your dev team or platform to ensure that testing is seamless and doesn’t interfere with user experience.

How long does an A/B test usually run?

The length of an A/B test depends on your website traffic and the desired level of statistical confidence. Most tests run for 2 to 4 weeks to gather enough data for a reliable result. We never “rush to a winner”. Instead, we wait until the test reaches statistical significance (usually at a 95% confidence level) to make sure the results are accurate and not due to random chance.
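The link between traffic and test length can be sketched with a standard sample-size approximation for comparing two conversion rates. The 80% power setting, the baseline rate, the lift, and the daily traffic below are illustrative assumptions, not figures from a real test, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, relative_lift, confidence=0.95, power=0.80):
    """Approximate visitors needed per variant to detect a relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    # Two-sided critical value for the confidence level, plus the power term.
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def estimated_days(n_per_variant, daily_visitors, variants=2):
    """Days needed if all daily visitors are split across the variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical inputs: 3% baseline conversion, 20% relative lift, 2,000 visitors per day.
n = visitors_per_variant(baseline_rate=0.03, relative_lift=0.20)
print(n, "visitors per variant,", estimated_days(n, daily_visitors=2000), "days")
```

Smaller expected lifts or lower traffic push the required duration up quickly, which is why the time frame varies from site to site.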

What happens after a test ends?

Once a test concludes, we perform a full analysis of the results. If a variation outperformed the original with statistical significance, we recommend implementing it as the new default. We also document what we learned from the test, even if it didn’t produce a winner — because every result adds insight. These learnings feed into the next round of testing, helping us refine the site further and drive continuous improvement.
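For readers curious what "outperformed with statistical significance" can mean in practice, the sketch below runs a two-proportion z-test on conversion counts. This is one standard method, shown under the assumption of a simple two-variant test with a fixed sample. The counts are made up, the function names are hypothetical, and testing platforms may use other statistical approaches.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or all converted): nothing to compare
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def is_significant(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """True if the difference clears the chosen confidence level."""
    return two_proportion_p_value(conv_a, n_a, conv_b, n_b) < 1 - confidence


# Hypothetical results: control converts 300 of 10,000, variation 360 of 10,000.
print(is_significant(300, 10_000, 360, 10_000))
```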

What is multivariate testing?

Multivariate testing is like A/B testing, but instead of testing one change at a time, it tests multiple elements simultaneously to see which combination performs best. For example, you might test 3 headlines and 2 images at the same time — resulting in 6 total combinations. It’s useful for more advanced optimization when you want to understand how different elements interact. However, it requires significantly more traffic to reach reliable results, so it’s typically used on high-volume sites.
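The combination count in the example above can be reproduced in a few lines. The headline and image names are placeholders chosen for illustration.

```python
from itertools import product

# Placeholder variants for illustration.
headlines = ["Headline 1", "Headline 2", "Headline 3"]
images = ["Image 1", "Image 2"]

# Every headline paired with every image.
combinations = list(product(headlines, images))

for headline, image in combinations:
    print(headline, "+", image)

print(len(combinations))  # 3 headlines x 2 images = 6 combinations
```

Each added element multiplies the number of combinations, and every combination needs enough visitors on its own, which is where the higher traffic requirement comes from.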

Ready to take the next step?

Then we are the right answer

- When increasing ad spend no longer works

- When you want to understand your users

- If you are a D2C e-commerce store

- If your traffic is over 5000 visitors/mo